Rereading Computer Systems: A Programmer's Perspective, Chapter 1

Notes from rereading Chapter 1.

Contents of Chapter 1:

- Information Is Bits + Context

- Programs Are Translated by Other Programs into Different Forms

- It Pays to Understand How Compilation Systems Work

- Processors Read and Interpret Instructions Stored in Memory

- Caches Matter

- Storage Devices Form a Hierarchy

- The Operating System Manages the Hardware

- Systems Communicate with Other Systems Using Networks

- Important Themes

- Summary

In Chapter 1, the authors briefly introduce what the whole book will cover. In my opinion, the core topic is abstraction.

Here are several points I had not thought about before.

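First, what is information? The authors tell us that information is bits + context: the same sequence of bytes might represent an integer, a floating-point number, a character string, or a machine instruction, depending on how it is interpreted. This may be even more important in the age of big data. When programming, the context of the data must be considered carefully, because it affects both the correctness and the performance of the code.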
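To make "bits + context" concrete, here is a minimal Python sketch. It is not from the book, and the four byte values are chosen purely for illustration. It reads the same four bytes under three different contexts: as ASCII text, as a little-endian unsigned 32-bit integer, and as a 32-bit floating-point number.

```python
import struct

# Four arbitrary bytes, chosen for illustration only.
raw = b"hell"

def interpret(data: bytes) -> dict:
    """Read the same 4 bytes under three different contexts."""
    return {
        "text": data.decode("ascii"),           # context: ASCII characters
        "int": struct.unpack("<I", data)[0],    # context: little-endian unsigned 32-bit integer
        "float": struct.unpack("<f", data)[0],  # context: little-endian IEEE 754 single precision
    }

views = interpret(raw)
print(views)
```

The bytes never change; only the format passed to `struct.unpack` (the context) decides what they mean.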

Second, every I/O device is modeled as a file in Unix-like operating systems. This is a high level of abstraction, and it is very helpful for programmers, because one uniform interface (reading and writing bytes) covers many different kinds of devices.
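The uniform interface can be sketched in a few lines of Python. This is an illustration under assumptions rather than an example from the book: a pipe stands in for a "device", and `read_all` is a hypothetical helper name. The same `os.read` call works on both a regular disk file and the pipe, because both are just file descriptors.

```python
import os
import tempfile

def read_all(fd: int, chunk: int = 4096) -> bytes:
    """Read from any file descriptor until end-of-file."""
    parts = []
    while True:
        data = os.read(fd, chunk)
        if not data:  # an empty read means end-of-file
            break
        parts.append(data)
    return b"".join(parts)

message = b"hello, world\n"

# 1) A regular file on disk.
with tempfile.TemporaryFile() as f:
    os.write(f.fileno(), message)
    os.lseek(f.fileno(), 0, os.SEEK_SET)  # rewind before reading back
    from_file = read_all(f.fileno())

# 2) A pipe, which is not a disk file at all.
read_end, write_end = os.pipe()
os.write(write_end, message)
os.close(write_end)  # closing the writer lets the reader see end-of-file
from_pipe = read_all(read_end)
os.close(read_end)

print(from_file == from_pipe)
```

`read_all` never needs to know what kind of object sits behind the descriptor, which is the point of the abstraction.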

Here are some points to review:

- There are two kinds of files: text files (which consist exclusively of ASCII characters) and binary files (all other files).

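- The flow of compilation (using a C program as an example):

    - Preprocessing phase: the preprocessor modifies the original C program according to directives (lines beginning with #) and generates another C program, typically with the .i suffix.

    - Compilation phase: the compiler translates the .i text file into another text file with the .s suffix, which contains an assembly-language program.

    - Assembly phase: the assembler translates the assembly language into machine language, producing a binary object file with the .o suffix.

    - Linking phase: the linker merges the object file with other precompiled object files, such as those from the standard C library, to generate an executable object file.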

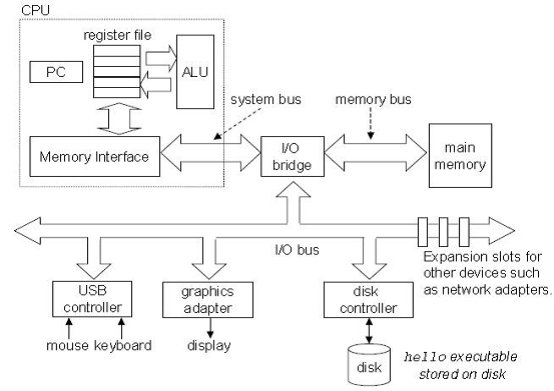
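The four phases above can be sketched as explicit commands. The Python snippet below is a hedged illustration, not the book's method: it assumes `gcc` as the compiler driver and a source file named `hello.c`, and `build_steps` and `run_steps` are hypothetical helper names. It uses the standard `-E`, `-S`, and `-c` options to stop after preprocessing, compilation, and assembly respectively.

```python
import subprocess

def build_steps(stem: str, compiler: str = "gcc") -> list:
    """Return one command (as an argument list) per phase for <stem>.c."""
    return [
        [compiler, "-E", f"{stem}.c", "-o", f"{stem}.i"],  # preprocessing: .c -> .i
        [compiler, "-S", f"{stem}.i", "-o", f"{stem}.s"],  # compilation:   .i -> .s
        [compiler, "-c", f"{stem}.s", "-o", f"{stem}.o"],  # assembly:      .s -> .o
        [compiler, f"{stem}.o", "-o", stem],               # linking:       .o -> executable
    ]

def run_steps(stem: str) -> None:
    """Run the four phases in order; requires the compiler and <stem>.c to exist."""
    for cmd in build_steps(stem):
        subprocess.run(cmd, check=True)

# Only print the commands here; call run_steps("hello") to actually build.
for cmd in build_steps("hello"):
    print(" ".join(cmd))
```

In everyday use a single `gcc hello.c -o hello` runs all four phases; splitting them out just makes the intermediate files visible.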

- Hardware Organization of a System:

    - Multi-level caches (the smaller the storage, the faster the access)

- Abstractions are provided by the OS

- Virtual memory

- Concurrency and Parallelism

    - Thread-Level Concurrency

    - Instruction-Level Parallelism

    - Single-Instruction, Multiple-Data (SIMD) Parallelism

- Three fundamental abstractions (abstraction -> what it abstracts):

    - Files -> I/O devices

    - Virtual memory -> both main memory and disk I/O devices

    - Processes -> the processor, main memory, and I/O devices
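Returning to the first review point, the text/binary distinction can be expressed as a one-line check. This is a minimal sketch following the definition given above (a text file consists exclusively of ASCII characters); `is_text` is a hypothetical helper name, and the sample byte strings are made up for illustration.

```python
def is_text(data: bytes) -> bool:
    """Return True if every byte is an ASCII character (value 0 to 127)."""
    return all(b < 128 for b in data)

source_like = b"#include <stdio.h>\n"                    # looks like C source
object_like = bytes([0x7F, 0x45, 0x4C, 0x46, 0x02, 0xFF])  # contains a non-ASCII byte

print(is_text(source_like))
print(is_text(object_like))
```

Under this definition a file with even one byte above 127 counts as binary, regardless of its suffix.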

The difference between concurrency and parallelism is also worth noting:

- Concurrency is a macroscopic concept: several processes appear to run at the same time on one CPU, because the CPU switches among them (they share one CPU).

- Parallelism means several processes really do run at the same instant on several CPUs (each has its own CPU).
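The single-CPU case can be modeled with a toy round-robin scheduler. This is a simplified sketch under assumptions, not how a real operating system works: the "processes" are Python generators that give up the CPU voluntarily after each step, whereas a real OS preempts them, and `task` and `round_robin` are hypothetical names.

```python
def task(name, steps):
    """A toy 'process': yields after each unit of work, as if switched out."""
    for i in range(steps):
        yield f"{name}{i}"

def round_robin(tasks):
    """Simulate one CPU: run one step of each task in turn until all finish."""
    trace = []
    queue = list(tasks)
    while queue:
        current = queue.pop(0)
        try:
            trace.append(next(current))  # the single CPU runs one step
            queue.append(current)        # then the task goes to the back of the queue
        except StopIteration:
            pass                         # finished tasks leave the queue
    return trace

trace = round_robin([task("A", 3), task("B", 2)])
print(trace)  # ['A0', 'B0', 'A1', 'B1', 'A2']
```

Both tasks make progress over the same period, yet only one step executes at any instant; that is concurrency. With parallelism, A's and B's steps could execute at the same instant on separate CPUs.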